Is It Time to Revisit Your Software Management Practices?

Many organizations haven’t changed their approach to software management since the early 2000s

Distributing and patching software are two of the most important tasks assigned to an organization’s IT team.

Many tools are designed to handle those tasks, which fall under the general heading of software management.

But what often goes unrecognized is that, regardless of which tools IT chooses for the job, process and policy issues need to be addressed before an organization can really take control of its software environment.

And many organizations haven’t changed their approach to software management since the early 2000s. In this blog, I point out several of these process considerations and explain their importance.

Centralized software deployment and patching are the most effective

In my experience, enterprises that use a decentralized model for managing software have much worse security hygiene than companies that use a centralized model.

And by centralized, I mean that an organization may have decentralized support staff but one central authority that determines which software and software updates will be installed, and when. The distributed support teams all march to that drummer.

Centralized software deployment

Centralization supports testing and patching speed

One of the most important aspects of software management is testing and patching speed. As you transition to a centralized model, you want to have a staged or phased rollout. You should have a few testing phases before promoting to production. A few means two or three, not 20!

The point is, you don’t want your endpoint devices to stay vulnerable any longer than necessary, so you want to get updates out as quickly as possible. In general, a realistic goal is 7 to 15 days from release to installation, and it may be shorter depending on how critical the update is.
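To make the staged-rollout idea concrete, here is a minimal Python sketch that turns a list of rollout rings into a start date for each ring and totals the days from release until the production ring’s window closes. The ring names and durations are hypothetical, chosen only to show two test phases before production fitting inside a 7-to-15-day goal; real rings and soak times depend on your environment and tooling.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Ring:
    """One phase of a staged rollout. Names and durations here are hypothetical."""
    name: str
    duration_days: int  # days allotted to deploy to and observe this ring


def rollout_schedule(release: date, rings: list[Ring]) -> list[tuple[str, date]]:
    """Start date of each ring; a ring starts only after the previous ring's window ends."""
    schedule = []
    start = release
    for ring in rings:
        schedule.append((ring.name, start))
        start += timedelta(days=ring.duration_days)
    return schedule


def total_rollout_days(rings: list[Ring]) -> int:
    """Days from release until the last (production) ring's window closes."""
    return sum(ring.duration_days for ring in rings)


# Two test phases before production, not 20.
RINGS = [
    Ring("pilot", 2),           # e.g., IT staff machines
    Ring("early-adopters", 3),  # a representative slice of business users
    Ring("production", 5),      # everyone else
]

if __name__ == "__main__":
    for name, start in rollout_schedule(date(2024, 1, 9), RINGS):
        print(f"{name:15} starts {start.isoformat()}")
    print("total days:", total_rollout_days(RINGS))  # 10, inside a 7-15 day target
```

Every ring you add lengthens every rollout, which is one practical reason to keep the test phases to two or three.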

That’s the policy side of software management. Define service-level agreements (SLAs) for your most critical patches, and keep the number of days as low as possible. Don’t base that number on what you’ve done for the past 20 years. Be aggressive in your goals.
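One way to make an SLA measurable is to record, for each patch, its severity, its vendor release date, and the date it was installed, then flag anything that stayed outstanding longer than its target. The sketch below assumes hypothetical targets of 7 days for critical, 15 for important, and 30 for everything else; the record layout and patch IDs are also illustrative, not taken from any particular tool.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import date
from typing import Optional

# Hypothetical SLA targets: maximum days from vendor release to installation.
SLA_DAYS = {"critical": 7, "important": 15, "moderate": 30, "low": 30}


@dataclass
class PatchStatus:
    patch_id: str                     # hypothetical identifier
    severity: str                     # one of the SLA_DAYS keys
    released: date
    installed: Optional[date] = None  # None means still missing


def days_open(p: PatchStatus, today: date) -> int:
    """Days the patch was outstanding (or has been, if still missing)."""
    end = p.installed or today
    return (end - p.released).days


def sla_breaches(patches: list[PatchStatus], today: date) -> list[str]:
    """IDs of patches that took, or are taking, longer than their severity's SLA."""
    return [p.patch_id for p in patches if days_open(p, today) > SLA_DAYS[p.severity]]
```

For example, a critical patch released on January 20 and still missing on February 1 has been open 12 days and would be reported as a breach of a 7-day target.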

30 days is a long time

If I walk into a company whose patching cycle is longer than 30 days, step one is to get updates released and installed on all devices within 30 days. It should be no worse than that.

You have to understand that when these updates come out, the bad guys see them, too. The people who want to infiltrate your systems can read the documentation; they can see what’s getting patched and why. The clock starts ticking immediately, and you need to update your software before exploits are developed and used. That’s why I say 30 days is a long time.

Now, you may not always want to deploy patches immediately to your production systems. Perhaps you have challenges with internal application dependencies. For example, .NET patches may not play nice with one of your custom, homegrown applications. These things are going to happen.

Typically, a window of about a week is enough to test for those conflicts. Ultimately, you want to get that 30 days down to as close to a week as possible. That should be the goal.

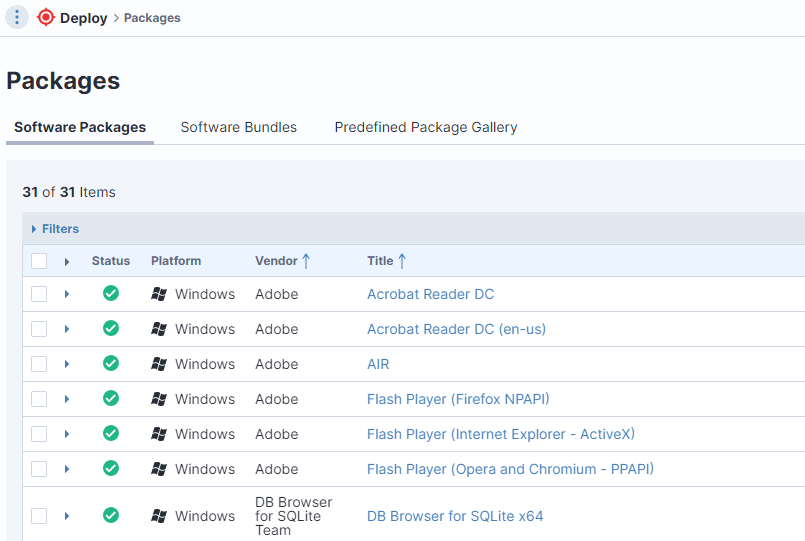

Comprehensive view of eligible updates

Just how bad is it? The world of “catchup patching”

What’s been eye-opening to me over the years is how many enterprises think they’re doing great or at least not badly. They give themselves a “B+.”

But then we perform an assessment and discover thousands of missing critical patches, some dating as far back as 2007. Obviously, something’s not working. It’s usually a combination of tools and processes, and both must be addressed.

For many companies, the patching methodology they’re using today is the same as it was in 2007 or 2010. But the world they’re operating in has changed. They’ve got a decade or more of missing patches, whether they know about them or not.

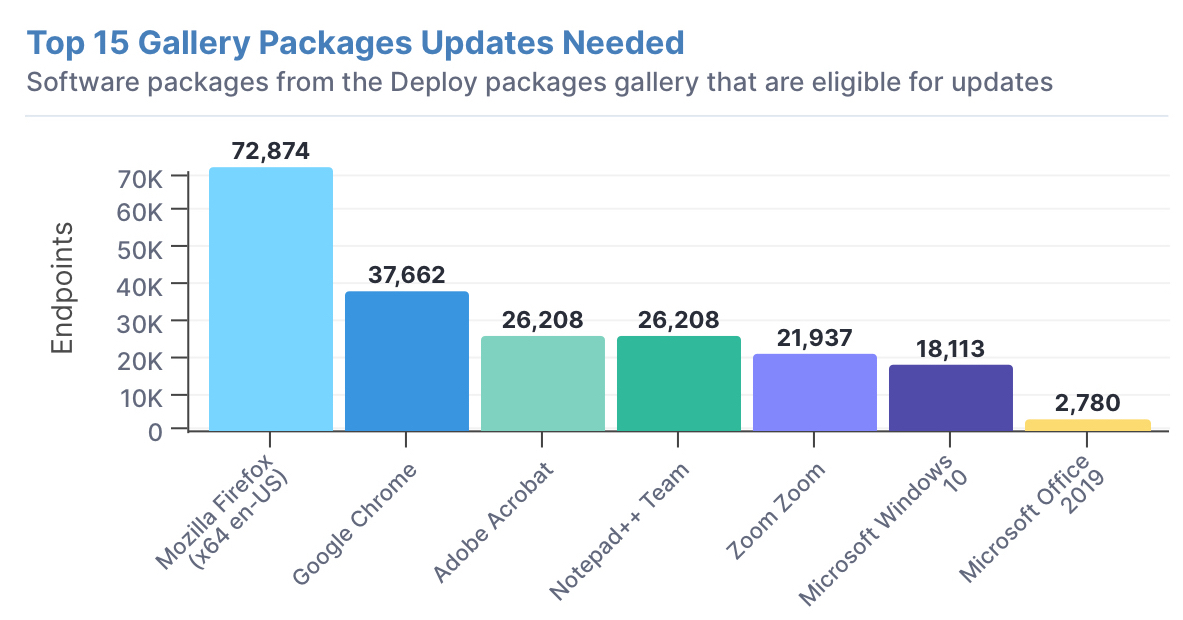

A common starting point is the critical and important security updates for your operating systems. Each of these updates carries a severity rating, so you can use those ratings to gauge how vulnerable your operating systems are.

Third-party application updates carry severity ratings too, including updates for Adobe Acrobat (see our blog about EOL for Adobe Flash), web browsers like Chrome and Firefox, and productivity apps like Office 365.

For “catchup patching,” start with the most critical and important updates. In a distributed environment, this gives you the most bang for your buck, but don’t stop there.
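A minimal sketch of that prioritization follows, assuming you can export each missing update with its vendor severity and the number of endpoints that lack it. It sorts by severity first and, within a severity, by how many devices are exposed, which is one reasonable reading of “most bang for your buck”; the field names and update identifiers are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass

# Lower rank is patched first, using the common critical/important/moderate/low scale.
SEVERITY_RANK = {"critical": 0, "important": 1, "moderate": 2, "low": 3}


@dataclass
class MissingUpdate:
    update_id: str          # hypothetical identifier
    severity: str           # one of the SEVERITY_RANK keys
    endpoints_missing: int  # how many devices still lack this update


def catchup_order(updates: list[MissingUpdate]) -> list[str]:
    """Most severe first; within a severity, the widest exposure first."""
    ranked = sorted(updates, key=lambda u: (SEVERITY_RANK[u.severity], -u.endpoints_missing))
    return [u.update_id for u in ranked]
```

Once the critical and important backlog is cleared, the same ordering keeps working down through moderate and low, so the catch-up effort doesn’t stop at the top tier.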

Technical assessment of software management shortcomings is easy — solving them is not

It doesn’t take long to identify gaps in software distribution and patching methodologies. But getting consensus on the policies and processes that will address those gaps can be time-consuming and contentious.

People build careers on doing things one way, and often they don’t want to change. In many cases, until they really begin to evaluate and reflect upon their policies, they don’t even realize that the only reason those policies exist is that “we’ve always done it that way.” One of the areas where you’re likely to encounter the biggest pushback is system reboots after updates.

That’s because system rebooting affects end users. It can literally take months for an organization to decide how to reboot endpoints with proper notification, so that nobody gets rebooted while away at lunch, in the middle of a presentation, or while working on a critical document.

This is where strong executive leadership is essential to enforce a reboot policy because, especially in the Windows world, a patch is not truly installed, and the vulnerable code is not replaced, until the system or application restarts.

Some organizations leave it up to end users to reboot, without any notification that a reboot is needed. End users will adapt to stronger policies if those policies are enforced.

They will learn to reboot the first or second time they get a notification — perhaps before lunch — instead of clicking “Postpone” right up until the reboot is enforced at an inopportune time.
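The notify-then-enforce behavior described above can be sketched as a small decision function. The postpone limit and the deadline are hypothetical policy parameters, not settings from any particular product; the point is that a user may postpone only up to a centrally set limit, after which the reboot is enforced.

```python
from __future__ import annotations

from datetime import datetime


def reboot_action(postpones_used: int, max_postpones: int,
                  now: datetime, deadline: datetime) -> str:
    """Decide how to prompt a user whose machine needs a reboot after patching.

    max_postpones and deadline are hypothetical policy knobs set centrally.
    """
    if now >= deadline or postpones_used >= max_postpones:
        return "enforce"  # no more deferrals: warn, then reboot
    remaining = max_postpones - postpones_used
    return f"notify ({remaining} postpone(s) left)"
```

Showing the remaining postpones in every notification is one way to nudge users toward rebooting at a time of their choosing, such as before lunch, rather than waiting for the enforced reboot.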

When we work with a customer on software distribution, one of the first things we check is when each system last rebooted. We often find that 75 percent of an organization’s systems have a reboot pending. So, those patches you applied? They haven’t really taken effect.
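If your inventory records each endpoint’s last boot time and its most recent patch install time, a rough pending-reboot check is to compare the two: a patch installed after the last boot has not taken effect yet. This comparison is a simplifying assumption for illustration; operating systems and management tools may expose their own pending-reboot indicators, which are more reliable. The field names are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass
class Endpoint:
    hostname: str
    last_boot: datetime
    last_patch_install: Optional[datetime]  # None if nothing installed since tracking began


def reboot_pending(e: Endpoint) -> bool:
    """Heuristic: a patch installed after the last boot is still waiting on a reboot."""
    return e.last_patch_install is not None and e.last_patch_install > e.last_boot


def pending_reboot_percent(endpoints: list[Endpoint]) -> float:
    """Share of endpoints with a reboot pending, as a percentage."""
    if not endpoints:
        return 0.0
    pending = sum(reboot_pending(e) for e in endpoints)
    return 100.0 * pending / len(endpoints)
```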

Risk is part of every conversation about software patching

The primary reason organizations don’t patch quickly is fear they’ll break something. When I talk with VPs and C-level executives about software updating, risk comes into every conversation. “What’s the risk of my system being vulnerable for 45 days versus the risk of me deploying something in 30 days and breaking my application?” It’s fear of the unknown. It’s fear of business disruption.

If you have a test plan and metrics, you can reduce that fear, even if the test plan is simply “Let’s deploy this update to a bunch of systems and see if anything breaks.” But you should be able to tell whether something broke. You should have that data rather than relying on someone opening a trouble ticket.
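As a sketch of what “having that data” might look like, the function below compares a pilot group’s failure metric before and after an update and flags a regression if the average rises by more than a tolerance. The metric (for example, application crashes per device per day) and the 10 percent default tolerance are hypothetical; a real test plan would choose metrics tied to the applications the business cares about.

```python
from __future__ import annotations

from statistics import mean


def pilot_regressed(before: list[float], after: list[float], tolerance: float = 0.10) -> bool:
    """True if the mean failure metric rose by more than `tolerance` (a fraction).

    `before` and `after` are samples of a hypothetical failure metric, e.g.,
    daily application crashes per device, from the same pilot group.
    """
    baseline = mean(before)
    current = mean(after)
    if baseline == 0:
        return current > 0  # any failures where there were none counts as a regression
    return (current - baseline) / baseline > tolerance
```

A check like this turns “nothing seems broken” into a number you can show application owners before promoting an update to the next ring.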

If application owners have hard data that patches are safe, they’re going to be more willing to sign on when you say you’re going to shrink your patching cycle from 30 to 15 days.

For a look at how Tanium simplifies software management, be sure to check out 9 Ways Tanium Gives You Control of Your Endpoint Software Environment.

If you’re ready to see it in action, sign up for a demo today.